The future of AI is context-aware and happening right at the Edge. AI is moving to the Edge so data can be processed locally—closer to where it’s generated—which reduces latency, lowers bandwidth costs, enhances privacy, and enables faster, more responsive user experiences.

But unlocking this potential has been hampered by market fragmentation and the struggle to integrate advanced AI capabilities into resource-constrained IoT devices. Proprietary software, closed ecosystems, and software innovation outpacing silicon advancements have held back broad adoption and development. A new class of Edge silicon is clearly needed to deliver the sophisticated AI intelligence demanded by today’s evolving applications, including multimodal contextual Large Language Models (LLMs).

Introducing Torq

Synaptics Torq™ is a groundbreaking Edge AI platform, powered by open-source technology from Google Research, that drives a new era of highly intelligent, context-aware devices and underscores our commitment to advancing the future of Edge AI.

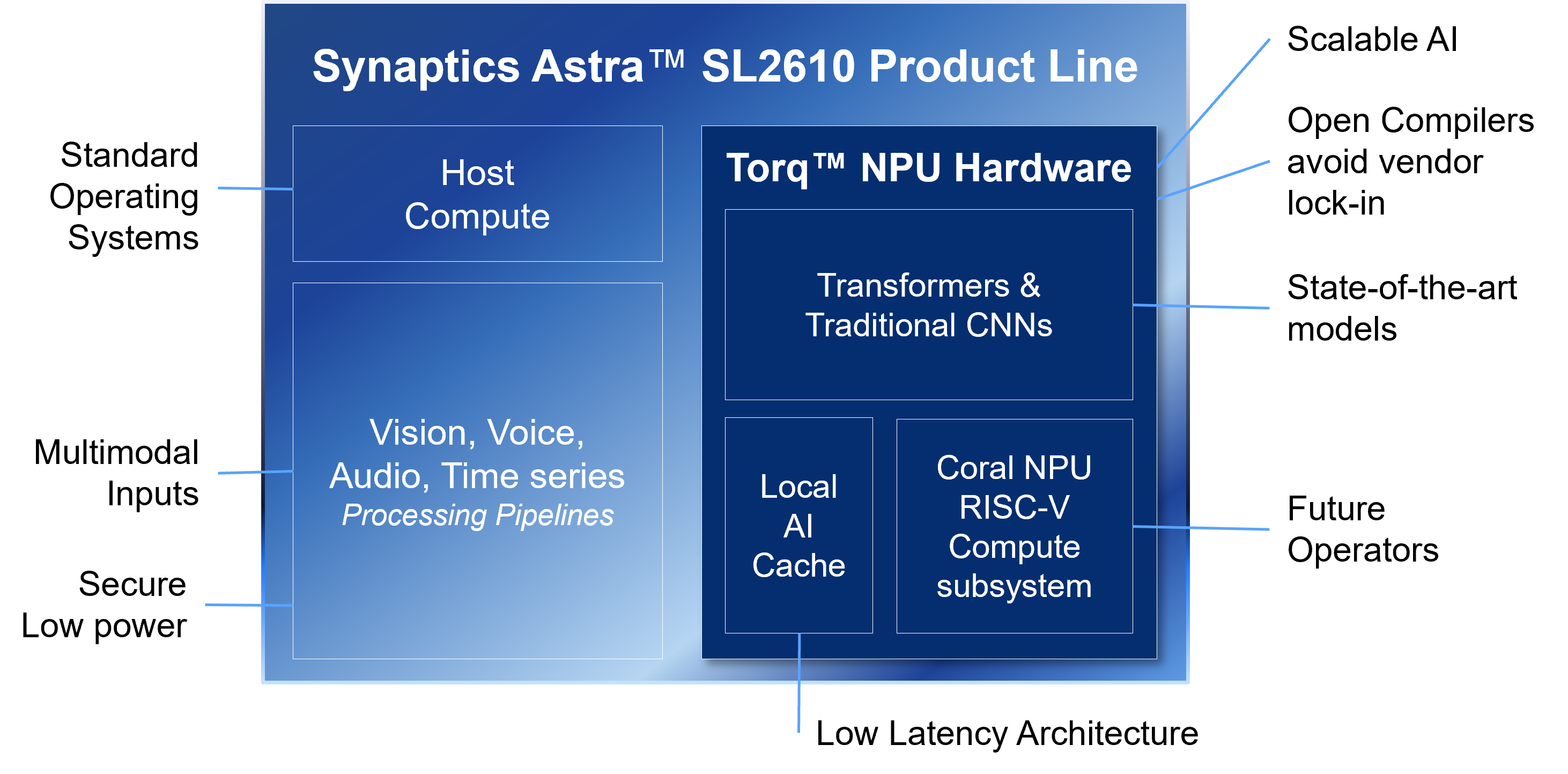

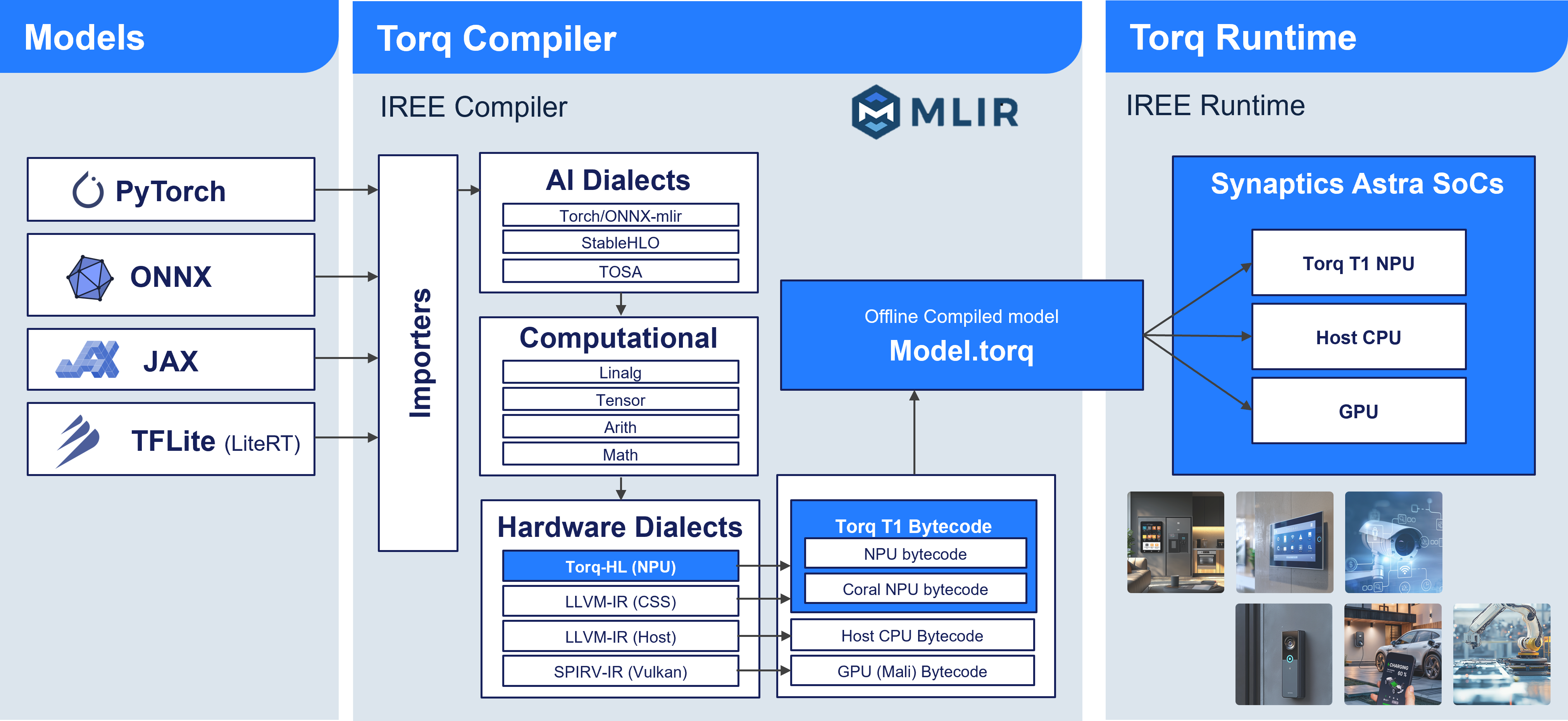

Torq combines core elements of NPU hardware and associated software to set a new standard in IoT application development. Delivered as part of Synaptics’ Astra™ line of AI-Native processors, the Torq line of NPUs is the result of close collaboration with Google Research and integrates their open-source Coral NPU. The software components include the Torq-compiler and the Torq-runtime, based on the open-source IREE/MLIR end-to-end compiler framework.

Torq Hardware

The hardware component of the Torq platform is an NPU subsystem with a unique, scalable architecture. This neural network accelerator is optimized for low-latency, high-throughput execution of deep learning workloads, including Convolutional Neural Network (CNN) and Transformer models. It integrates the Google Coral NPU, a dedicated RISC-V processor implementation designed to handle new or unsupported operations. The result is a tiered processing system in which a flexible programming model distributes work across the fully accelerated compute engines, the RISC-V core, and the dual-core Arm host processor, making efficient use of each resource.
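To make the tiered idea concrete, here is a minimal Python sketch of how an operation might be routed to the most specialized tier that supports it. It is an illustration only, not the Torq compiler's actual logic: the operator names, the two capability sets, and the `assign_tier` helper are all hypothetical.

```python
from enum import Enum


class Tier(Enum):
    """The three processing tiers described in the text."""
    NPU_ENGINE = "fully accelerated compute engines"
    RISCV_CORE = "RISC-V core"
    ARM_HOST = "Arm host processor"


# Hypothetical capability sets, invented for this example.
# They do not describe what the real hardware supports.
NPU_ENGINE_OPS = {"conv2d", "matmul", "relu", "softmax"}
RISCV_CORE_OPS = {"gelu", "layer_norm", "custom_kernel"}


def assign_tier(op_name: str) -> Tier:
    """Pick the most specialized tier that supports the operation."""
    if op_name in NPU_ENGINE_OPS:
        return Tier.NPU_ENGINE
    if op_name in RISCV_CORE_OPS:
        return Tier.RISCV_CORE
    # Anything else falls back to the general-purpose host.
    return Tier.ARM_HOST


def plan(ops):
    """Return (operation, tier) pairs for a sequence of operation names."""
    return [(op, assign_tier(op)) for op in ops]


if __name__ == "__main__":
    for op, tier in plan(["conv2d", "gelu", "top_k"]):
        print(f"{op:>10} -> {tier.value}")
```

The point of the ordering is that an operation only moves to a slower, more general tier when no faster tier supports it, so a model with an unfamiliar operator still runs end to end.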

Torq Software

The software is the second half of the Torq platform, offering a compiler, runtime, build framework, and other tools to leverage the NPU. It enables developers to create multimodal AI applications for vision, audio, and voice that run seamlessly on the NPU.

The differentiating aspect of Torq is its open-source compiler, developed in partnership with Google. This approach directly contrasts with offerings from other vendors, whose AI compilers are typically proprietary and closed source. By making the compiler and toolchain open source, Synaptics and Google aim to create a more accessible ecosystem for developers – a major advancement for the Edge IoT ecosystem.

Real-World Performance Metrics

Torq shifts the focus from raw TOPS (Tera Operations Per Second) numbers, which are often misleading, to metrics that reveal real device efficiency. Torq is designed to minimize model inference time, a more accurate and relevant measure of performance for real-world use cases.
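One way to see the gap between a peak TOPS figure and real performance is to time inference directly and derive the throughput actually achieved. The sketch below assumes a hypothetical `run_inference` callable standing in for a real model invocation; the operation count and the workload are placeholders, not Torq measurements.

```python
import math
import statistics
import time


def measure_latency(run_inference, warmup=3, iterations=20):
    """Time repeated calls and return latency statistics in milliseconds."""
    for _ in range(warmup):
        run_inference()  # warm-up runs are not recorded
    samples_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_inference()
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    samples_ms.sort()
    # Nearest-rank 95th percentile of the sorted samples.
    p95_index = max(0, math.ceil(0.95 * len(samples_ms)) - 1)
    return {
        "min_ms": samples_ms[0],
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
    }


def achieved_tops(ops_per_inference, latency_ms):
    """Convert an operation count and a measured latency into achieved TOPS."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return ops_per_inference / (latency_ms / 1000.0) / 1e12


if __name__ == "__main__":
    def fake_inference():
        # Stand-in workload; replace with a real model call.
        sum(i * i for i in range(10_000))

    stats = measure_latency(fake_inference)
    print(stats)
    # Placeholder operation count, chosen only to show the arithmetic.
    print(achieved_tops(2e9, stats["median_ms"]))
```

As a worked example of the arithmetic, a model that needs 2 billion operations and completes in 10 ms achieves 0.2 TOPS, whatever peak figure the datasheet quotes. Comparing that achieved number with the peak shows how much of the advertised compute a given model actually uses.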

A Shared Vision: Openness and Efficiency at the Core

Working independently, Synaptics and Google converged on the same vision for overcoming the challenges of Edge AI: openness and efficiency.

Google’s Coral NPU: Pioneering Edge Compute Architectures

Since 2016, Google Research has focused on advancing Edge AI by co-designing hardware and software for applications like smart speakers and Google Assistant. The experience gained from the resulting Coral products highlighted a clear market need for a platform that could support ML frameworks beyond TensorFlow Lite. At the same time, the rise of Large Language Models (LLMs) created a significant opportunity to bring powerful generative AI to the Edge.

Coral NPU is the direct response to these drivers. It's a unified, open-source platform built on the RISC-V standard that integrates Google's foundational work in open ML compilers (like IREE) with enhanced security. Google believes this open approach is the best way to build a robust and innovative Edge AI ecosystem, encouraging wide adoption and industry-wide collaboration.

Empowering Developers: Key Benefits for AI-Native Edge IoT Product Development

The Synaptics and Google partnership is fundamentally about empowering developers, offering a robust and open environment for creating next-generation AI-native Edge IoT products.

Open & Standardized Development Environment

This collaboration shifts Edge AI away from fragmented, proprietary ecosystems.

- Freedom from Lock-in: Based on the open-source IREE/MLIR compiler and runtime, Torq helps developers avoid opaque toolchains, licensing fees, and access restrictions.

- Flexible & Programmable: The C-programmable front end supports custom kernels and leading ML frameworks like PyTorch, ONNX, JAX, TensorFlow, and LiteRT.

- Continuous Improvement: Community-influenced development enables faster iteration, better efficiency, and ongoing enhancements.

Scalability & Future Proofing

Built to keep pace with evolving AI.

- Adaptable Architecture: Scales from small IP to hundreds of TOPS, supports transformers and LLMs, and is designed to support future operators efficiently.

- Heterogeneous Computing: Tasks the NPU does not handle can run on the RISC-V cores, GPUs, or the host Arm cores, ensuring models always run.

Developer Ecosystem & Support

Beyond hardware, developers gain rich tools and resources.

- SDKs & Tools: Full Astra AI-Native SDKs for multiple OS platforms on GitHub.

- Optimized Resources: Extensive documentation, pre-optimized models, multimodal AI apps, and curated developer journeys via developer.synaptics.com.

The Path Ahead: Expanding the Edge AI Ecosystem

The Synaptics–Google partnership is building an open, evolving ecosystem for AI-native devices. Through open-source innovation, we’re accelerating tailored SoCs that scale from tiny edge devices to cloud-based LLMs—unlocking sophisticated models, new device classes, and richer experiences across consumer and industrial markets.

Abdel Younes joined Synaptics in 2019 and currently serves as the Sr. Director, Machine Learning Frameworks & Apps. His career spans a diverse range of domains—from aerospace research and open-source development to powerline communication, multimedia, and artificial intelligence. This broad technical foundation is matched by Abdel’s strong leadership and management capabilities, developed through years of experience driving innovation across multidisciplinary teams. His expertise continues to evolve as he remains deeply engaged with emerging technologies and their real-world applications. Abdel holds both a Master of Science and a Ph.D. in Signal Processing, Telecommunications, and Navigation from ENAC – École Nationale de l'Aviation Civile.